Sample myths you need to stop believing

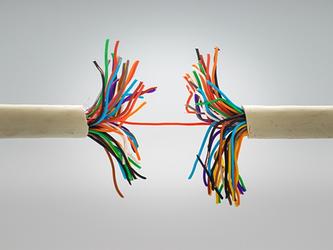

Good researchers are labouring under myths that stand in the way of ensuring sample quality. These myths range from the simple and outdated belief that double opt-in panels remain the ‘gold standard’ and real-time/river sample is bad, to the dangerous belief that fraud is a small ‘bot problem’. It is time for us to reset our understanding of good sample practices.

Let’s start with a statistical truth: there is no methodological reason to prefer double opt-in recruitment – where the respondent opts in via an initial form and is then sent an automated email to confirm that they want to join the panel – to river, where the potential respondent is driven to an online portal and screened for studies in real time.

The industry willingly dived into the pool of convenience sampling years ago. Without going into the detail, it is enough to say that negativity has haunted river sample for years, while double opt-in panel participation has plummeted. But none of this matters – below are the dimensions that do.

Breadth of recruitment sourcing

Forget this: People who commit themselves to panels provide more accurate data.

Believe this: A breadth of sources is most appealing to clients seeking diverse audiences and avoids the ‘professional respondent’ issue.

Ask your provider this: How many recruitment sources do you work with, and what share of your completes are provided by the top suppliers?

In today’s convenience sampling world, finding respondents from multiple sources is a practical solution for creating diversity. It addresses the problem of ‘professional respondents’, which has as much to do with sourcing participants as it does with the panel value proposition. Traditional panels tended to focus on a small number of recruitment partners because finding a good supplier was difficult, account management and reporting were manual and cumbersome, and pricing would always improve with greater business.

Now, the respondent ‘supply chain’ can be completely automated and thus optimised to the benefit of all parties. When recruitment is done right, sample suppliers source from hundreds of partners of all types, providing diversity while minimising biases that arise with concentrations of similar types of people.
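The question about the share of completes provided by the top suppliers comes down to simple arithmetic. The sketch below is one way a buyer might check it, assuming the provider can supply a count of completes per recruitment source. The function name, the supplier labels and the figures are hypothetical and used only for illustration.

```python
def top_supplier_share(completes_by_supplier, top_n=3):
    """Return the fraction of all completes delivered by the top_n largest suppliers."""
    total = sum(completes_by_supplier.values())
    if total == 0:
        return 0.0
    # Sort supplier volumes from largest to smallest and keep the top_n.
    largest = sorted(completes_by_supplier.values(), reverse=True)[:top_n]
    return sum(largest) / total


# Hypothetical figures for illustration only.
completes = {
    "supplier_a": 500,
    "supplier_b": 300,
    "supplier_c": 100,
    "supplier_d": 60,
    "supplier_e": 40,
}

share = top_supplier_share(completes, top_n=2)
print(f"Top 2 suppliers provide {share:.0%} of completes")
```

A high figure here suggests the sample leans on a handful of sources, which is the concentration this section warns about.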

Dynamic fraud detection

Forget this: Fraud is small and it’s mainly bots that can be stopped through address validation, digital fingerprinting and trap questions.

Believe this: Fraud is conducted by skilled humans using automation to massively attack systems and nothing the industry has used to date is sufficient to deter it.

Ask your provider this: What concrete measures do you take to control fraud? (If they tell you they do all the ‘standard’ things, run away.)

Conventional wisdom suggests that between five and 20 per cent of completes in a given survey might be fraudulent. That’s a lot. If that were not bad enough, most of it gets detected after the project is out of field.

Today’s fraudsters are no amateurs. They are men and women using sophisticated tools. Because of this, static techniques – from address validation to trap questions to digital fingerprinting and even the industry’s off-the-shelf solutions – are individually and collectively insufficient.

Fortunately, there are techniques one can use to turn the tide. Artificial intelligence (machine learning and deep learning in particular) can sift through the massive amounts of data and detect the ever-changing profile of fraudsters. Dynamic automated methods are essential here.

Real-time optimisation of respondent experience

Forget this: Respondent experience is mainly about rewarding the panelist appropriately and ensuring that the survey works correctly. A poor experience arises chiefly because of bad survey design.

Believe this: While survey design plays a role, respondent experience is about every facet of the respondent lifecycle.

Ask your provider this: What proactive measures are you taking to ensure respondent engagement and satisfaction? How much control are you giving respondents over their own experiences?

It is not exactly a secret. Sample quality is poor because the respondent experience stinks. The industry has a ton of research on research showing that a bad experience leads to bad data. It’s time to look beyond questionnaire design and mobile readiness when it comes to this issue. There is plenty of blame to go around.

Rather than getting into the weeds of survey design, the simple solution for sample suppliers is to monitor the project in field, capturing data from field statistics to respondent ratings of the study, and deal with it accordingly. Studies that have good respondent experiences, which we can define simply as those where respondents complete a survey efficiently and with minimal pain, see greater sample flow. Bad experiences, no matter their length or design elements, progressively restrict sample flow. These studies should be quarantined to minimise participant impact, and if they are bad enough, they should be shut down. Automation allows all this to happen in real time.
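The monitoring approach described above can be sketched as a simple rule that maps in-field data to a sample-flow decision. This is a minimal illustration and not any supplier's actual system: the `FieldStats` fields, the 1-to-5 rating scale and every threshold are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class FieldStats:
    starts: int         # respondents who began the survey
    dropouts: int       # respondents who abandoned it part-way
    mean_rating: float  # respondent rating of the study, assumed 1 (poor) to 5 (good)


def flow_decision(stats, min_starts=50):
    """Map in-field data to a flow decision. All thresholds are hypothetical."""
    # Too little data to judge the study yet: leave sample flow unchanged.
    if stats.starts < min_starts:
        return "normal"
    dropout_rate = stats.dropouts / stats.starts
    # Bad enough to stop sending respondents altogether.
    if dropout_rate > 0.6 or stats.mean_rating < 1.5:
        return "shut_down"
    # Poor experience: restrict flow to limit participant impact.
    if dropout_rate > 0.4 or stats.mean_rating < 2.5:
        return "quarantine"
    # Good experience: allow greater sample flow.
    if dropout_rate < 0.15 and stats.mean_rating >= 4.0:
        return "boost"
    return "normal"


good_study = flow_decision(FieldStats(starts=200, dropouts=20, mean_rating=4.3))
poor_study = flow_decision(FieldStats(starts=200, dropouts=100, mean_rating=2.8))
print(good_study, poor_study)
```

Re-running a rule like this each time new field data arrives is what would let the adjustment happen in real time. A production system would presumably draw on more signals than the two used here.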

The online experience and the technology that enables it have changed dramatically since market research moved online some 20 years ago. The challenge in periods of great change is determining which beliefs remain true and which need adjusting. An honest assessment of panel recruitment methodology and practices reveals no theoretical justification for preferring double opt-in recruitment over real-time sampling, or vice versa. What is most important is that buyers ensure their suppliers are recruiting and retaining real, attentive and diverse people, not by relying on mythical shortcuts, but by asking the right questions and demanding new approaches.

JD Deitch is chief revenue officer at P2Sample

Research Live is published by MRS.
