AI-moderated interviews explained: how conversational research delivers high-quality qualitative data at scale

For years, researchers have optimized survey layouts, shortened question grids, and refined sampling logic. But today, a different challenge is rising: open-ended answers are getting shorter, drop-out rates are climbing, and respondents are increasingly disengaged.

The question isn’t just how to improve surveys. It’s how to use new technologies to collect data at scale without losing the depth and nuance that human conversations provide.

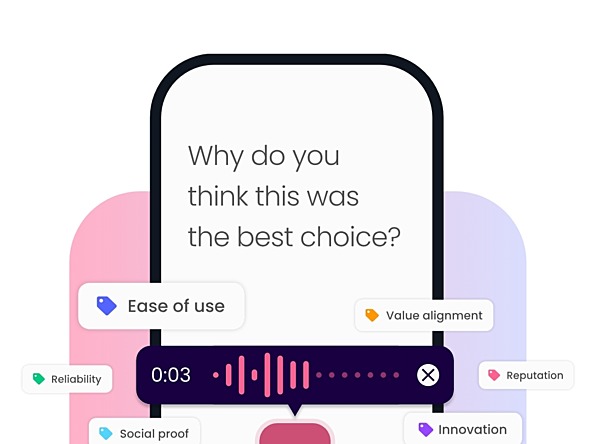

That’s where AI-moderated interviews (AIMIs) come in. Pioneered and tested at Glaut, AIMIs are AI-native interview flows that probe, clarify, and adapt in real time, generating both structured metrics and rich open-text responses. They combine the scalability of quant with the contextual intelligence of qual.

Merging qualitative depth with quantitative structure

AIMIs offer a third option in research design, alongside surveys and in-depth interviews (IDIs). They use conversational AI agents to run interviews in voice, text, or hybrid form, adapting their flow in real time based on participant input. That adaptivity yields richer responses than static forms, with far less “gibberish” or low-effort content.

At the same time, AIMIs generate structured outputs: numeric ratings, scale questions, and timestamped metadata are all part of the same flow. Researchers can blend depth with consistency, capturing the nuance of open expression alongside the measurability of survey metrics.

The result is that AIMIs allow researchers to scale up qualitative insight without giving up the logic, structure, or analytical throughput that quantitative work depends on.

Faster fieldwork, cleaner data: operational advantages of AIMIs

Glaut’s first internal comparative study of AI-moderated interviews versus surveys found that:

● Respondents in AIMIs produced 129% more words on average than survey respondents.

● Gibberish was cut in half.

● Valid completion rates rose from 39% to 61% without sacrificing user satisfaction.

These performance gains don’t come from novelty alone. They stem from the mechanics of conversation: when people feel heard rather than processed, they tend to stay, elaborate, and contribute more useful data.


From a delivery standpoint, AIMIs offer speed and consistency gains that matter:

● Parallel interviews mean no scheduling bottlenecks. Fieldwork that once took weeks can now run overnight.

● Built-in fraud detection flags poor-quality inputs before they pollute the dataset.

● Automated analysis pipelines extract themes and sentiment as data comes in, reducing turnaround time dramatically.

And yet, participants consistently rate the experience as natural, engaging, and non-judgmental, particularly in voice-based formats. This balance of automation and empathy is what makes AIMIs more than just a tech novelty. It’s a methodology built for how people actually want to express themselves and how researchers actually want to use the results.

Glaut’s mission in research

At Glaut, we don’t see AIMIs as a replacement for human-moderated interviews. Instead, they complement both IDIs and surveys, offering a flexible, efficient option when teams need to scale qualitative insight, capture richer verbatims, or enhance mixed-method designs with conversational input.

As adoption grows across industries – from consumer goods to healthcare to media – we’re continuing to validate the method in new languages, populations, and use cases. The ambition is not to hype AI, but to rebuild how we ask questions, so that research can be both faster and more meaningful.

For those exploring this space, our first comparative benchmarks and design principles are published in Glaut’s AI-moderated interviews whitepaper.

Matteo Cera is co-founder of Glaut.


Research Live is published by MRS.

