OpenAI seeking to mitigate risks of more powerful AI models

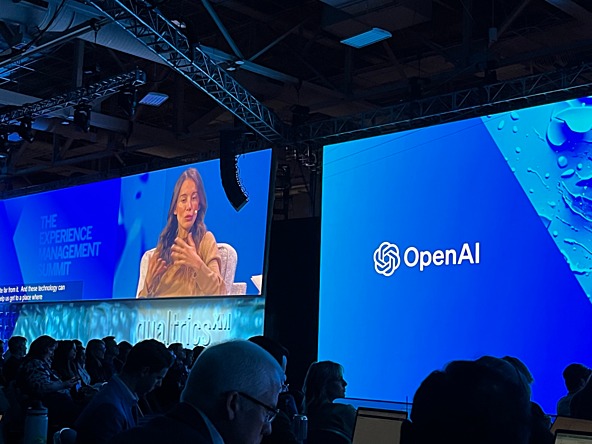

Speaking at the Qualtrics X4 conference in Salt Lake City yesterday (1st May), OpenAI chief technology officer Mira Murati said that it was striking how quickly generative AI had “burst into the public consciousness, and how that is affecting the regulatory framework”, adding that governments, regulators and civil society were examining how AI could be used as safely as possible.

In conversation with Qualtrics president of AI strategy Gurdeep Singh Pall, who joined the company last month from Microsoft, Murati said that the next ChatGPT would be a further step up.

“From GPT3 to GPT4, there was another emergence of capabilities in reasoning and different domains,” Murati explained. “We should expect another step change as we go into the next model and scale up more.”

She added: “There’s a very big opportunity here for these systems to completely transform our relationship with knowledge and creativity. That can be applied to every domain.

“We will be delegating more and more tasks to AI systems over longer and longer time horizons, and the tasks will get more and more complex.”

With more powerful systems come greater risks, and Murati said that safety was a key focus of OpenAI as it develops AI’s capabilities.

“Of course we need to develop the capabilities of these models, and make them more powerful, robust and useful,” Murati said. “But hand in hand with that goes also the safety of these models, and making them more aligned with human values and making sure that they are doing the things we are trying to do, whether that’s an explicit direction or implicit direction.”

Murati said OpenAI wants to avoid harmful misuses of AI or “catastrophic risks, where the models get so powerful, we lose the ability to understand what is going on and the models are no longer aligned with our values”.

To address this, the company was deploying systems early, while they are less powerful, so that the impacts on specific industries and companies could be examined and the risks mitigated.

“We believe that it is very important to bring industry, government, regulators and broader society along,” she added. “But not as passive observers, but as active participants in understanding what these technologies are capable of.”

A team at OpenAI is looking at the risks of misuse, Murati explained, adding that while “it is never going to be the case we will bring the misuse or the risks down to zero”, “we should do everything we can to minimise the misuse”.

There is also a team focused on the long-term catastrophic risks that could emerge from models “that are so powerful we could no longer supervise them”, Murati added.

Murati said she wanted to develop generative AI “in partnership with everyone else” and bring the technology into the public consciousness “when the stakes are low, and partnering with industry, government and civil society to figure out how to make the technology more robust for general use”.

When asked what the future of AGI (artificial general intelligence) could look like for humanity, Murati said the technology will get more powerful, with no evidence that its upward trajectory will cease anytime soon.

She added that speech, video and images, in both perception and generation, are coming into these models, with the aim of helping AI understand the world as a human does.

“If we are making great progress towards our mission, it should hopefully be a future where we feel more enabled by this technology and our knowledge and creativity is more enhanced,” Murati concluded.

“Of course, it will change the meaning of work and how we interact with everything – information, each other, ourselves – but I am optimistic if we get this right, it will be an incredible future.”

Research Live is published by MRS.
