NEWS 19 February 2018

UK – Video intelligence platform LivingLens has launched advanced recognition capabilities to improve consumer video content analysis.

The launch includes facial emotion, tonal and object recognition. The new capabilities supplement the company's existing video intelligence suite.

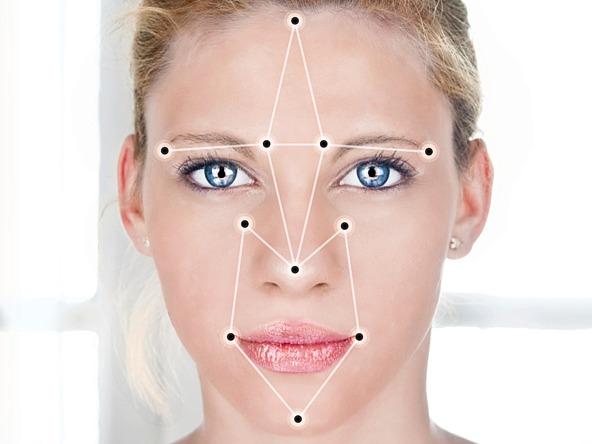

The company said artificial intelligence identifies key landmarks and expressions of the human face. Deep learning algorithms then analyse that information to classify facial expressions within video content, and each expression is mapped to a specific emotion.

Tonal recognition gives insight into the way people are communicating, advancing analysis beyond ‘what’ people are saying to ‘how’ they are saying it. The tone in which words are spoken can reveal additional understanding of how consumers are feeling.

Object recognition helps to determine where consumers are, be that in a shop, at the airport or in a kitchen, and therefore what they are doing.

Results from the three new recognition capabilities are time-stamped against the corresponding video content, allowing researchers to pinpoint the exact moments of interest. Results are returned within seconds, making the analysis of video content possible in near real time.

Carl Wong, CEO, said: “Our mission is to unlock the insight in people’s stories to inspire decisions and technology is allowing us to accelerate our ability to interpret consumer video content at scale. Where once video was limited to small scale studies, it’s exciting to see projects with large volumes which simply weren’t practical before.”
