FEATURE 23 August 2012


With panel companies under pressure to cut costs, Research Now’s Chris Dubreuil and Mike Murray set out to quantify the impact that lower incentive levels would have on drop-out rates and respondent experience.

The problem of incentives for online research has troubled the research industry for a long time. We feel intuitively that a higher incentive will encourage a better response and get us better data quality. And we also feel that appealing to the better nature of some participants (such as higher income groups and business respondents), through charity donations for example, will again lead to lower drop-out rates and better data quality. But are our intuitive feelings right?

And how much do incentives really matter? We know that there are plenty of other factors besides incentive levels that affect the quality of data generated by surveys. A 2010 study by Research Now found that badly designed questionnaires have a tangible short term effect on data quality and a larger, medium term effect on overall panel quality – with attrition projected to be far more pronounced for those panellists who aren’t engaged.

We identified four areas where the industry needs to raise its game for online engagement: we need to respect and trust survey participants; go back to basics for questionnaire design; collaborate on what works online; and use new techniques (HTML5, ‘gamification’) to optimise the survey experience.

Slowly but surely, we are seeing positive gains, but the incentive issue has again reared its head thanks to the economic pressures faced by businesses. This year has seen constant pressure applied to panel companies and sample providers to cut prices, and lowering incentives is one way to do this.

At Research Now we believe it is important to incentivise our panel members for every survey, and we are convinced that doing so with the correct methodology and incentive level is very important to data quality. But where does the trade-off come? Put bluntly, what is most important: quick, cheap un-incentivised research, or research where a higher priority is placed on rewarding the respondent for their time?

In searching around, we could not find any recent research showing how people really want to be rewarded for taking part in surveys. There was no data available on any demographic or national differences in how to motivate people in an unbiased manner.

So we decided to do a multi-country research-on-research study to find out which incentives really rocked participants’ boats and which left them cold. We designed our study to find out what was the preferred incentive model, how that affects respondent satisfaction, how it influences drop-out rate and the overall impact on the quality of data.

The research was carried out in June in six markets globally: the UK, USA, Canada, Australia, France and Germany. Using the Olympics as the topic of the survey, we designed a 16-minute questionnaire which stayed consistent across all six markets. To provide sample, we used our proprietary online access panels along with our own social media sample.

Our online access panel sample is recruited online and we frequently ask them to contribute to general consumer surveys. These panellists receive a cash reward for participating ranging from 50p to £5. For the purposes of this survey, the standard incentive was 75p.

Our social media sample acts as a source for recruiting consumers who do not typically communicate via email; 18- to 24-year-old males, for example, are over-represented in it. We engage social media users who are using an application within a site (such as Facebook Credits to rent online films or magazines, or as currency for online games) and offer these members currency for that particular application, making the incentive relevant and attractive.

We typically use a representative mix of both these sample sources to make sure we get complete coverage. Clearly, if you use only one source (such as an access panel) there will always be sub-segments of the population who aren’t represented that well, because of the channel we are inviting them through.

As we learnt from our 2010 survey, we had to provide a well-designed survey experience. We needed the quality of the survey to be a ‘constant’, so that response rates did not suffer and drop-outs did not increase because the questionnaire was unengaging. We needed the focus to be on the incentive.

“Panel companies and sample providers are under constant pressure to cut prices, and lowering incentives is one way to do this. But what is most important: quick, cheap un-incentivised research, or research where a higher priority is placed on rewarding the respondent?”

To ensure a safe and robust sample, each country was balanced using hard quota targets matched to general population statistics, according to social grade, age, gender, income and presence of children. We then split and again balanced the sample per market into three cells – cell one offering the standard incentive, cell two at a level 33% lower than the standard and cell three 33% higher.
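As a rough illustration of the three cells, the sketch below derives the implied incentive levels from the 75p standard quoted for the access panel. The article does not state the pence values for cells two and three, so the derived figures (roughly 50p and £1) are an inference, and the function name is hypothetical.

```python
# Illustrative sketch only: implied incentive per cell for the access-panel sample.
# The 75p standard and the 33% adjustment come from the article; the derived
# values for cells two and three are NOT quoted in it.
STANDARD_PENCE = 75
ADJUSTMENT_PERCENT = 33


def cell_incentives(standard_pence: int, adjustment_percent: int) -> dict[str, float]:
    """Return the incentive in pence for the standard, lower and higher cells."""
    return {
        "cell_1_standard": float(standard_pence),
        "cell_2_lower": standard_pence * (100 - adjustment_percent) / 100,
        "cell_3_higher": standard_pence * (100 + adjustment_percent) / 100,
    }


levels = cell_incentives(STANDARD_PENCE, ADJUSTMENT_PERCENT)
for cell, pence in levels.items():
    print(f"{cell}: {pence:.2f}p")
```

In practice the lower and higher levels would presumably be rounded to convenient amounts; the social media sample was paid in application currency, so this arithmetic applies to the cash-rewarded panel only.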

Although each cell had a set incentive, we asked each respondent a separate question as to what incentive they would have preferred, given the choice of cash, vouchers, charitable donations, social media credits, entry into a sweepstake or access to the survey findings.

We generated a robust set of data, with 7,200 completed surveys globally (1,200 per market) and with quotas set to population statistics, not online statistics. To make sure we were maximising subject engagement, all of those interviewed had stated that they would be watching at least some of the 2012 Olympic Games. The incidence rate was 78% across all markets, with minimal fluctuations between them (France and Germany, for example, showed slightly less interest in the Olympics).

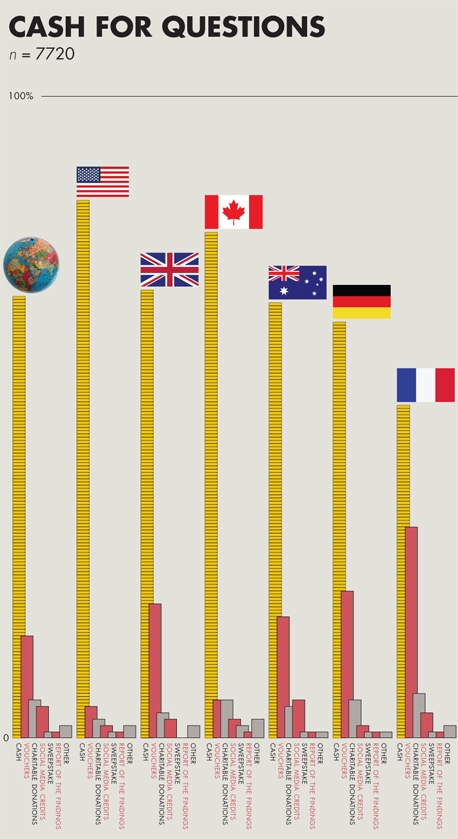

Seven out of ten respondents opted for cash when asked which incentive they would prefer to receive, making it overwhelmingly the most popular form of incentive overall, in all countries and across all social grades. Vouchers came second, followed by charity donations, although interest in donations was relatively low across the board – even among higher social grades and business respondents.

For this survey, it seems, getting to see the results of the survey was of little interest to our respondents (see chart).

There were some interesting national differences worth looking at, however. For cash specifically, 84% of US and 79% of Canadian participants preferred cash; the Europeans slightly fewer. Overall, 65% of German participants were primarily interested in cash and just 52% of French. In these markets, vouchers and charity donations were a little more popular. And among older participants aged 55 and over, vouchers were slightly more popular – reflecting a tendency among this group of respondents to use surveys as a way of earning vouchers to gift as presents to friends and family members. A little altruism at last.

Our study showed, as you might expect, that a fairly incentivised respondent is a happy respondent. Asked if the reward was fair compensation for taking part, 80% of participating panel members agreed (or strongly agreed) that we rewarded them fairly – with people in the higher-incentive group agreeing more strongly than those in the lower-incentive group.

More interestingly, we found that different levels of incentive influence the respondent’s perception of the quality of the survey experience itself. As we have explained, we took considerable care to produce a good questionnaire experience, but even taking that into account the higher incentive did indeed correlate with a reportedly better survey experience, with 87% of the high incentive group agreeing as opposed to 84% of the lower incentive group – significantly different.

In addition to overall satisfaction, we also found that incentive rates have a small influence on the respondent’s perception of survey length. The more you pay as an incentive, the more likely the respondent is to think the length was acceptable – overall 89% of the high incentive group felt the survey length was acceptable, as opposed to 86% of the lower incentive group. Again, this is significantly different.
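To see why gaps of three percentage points can count as significant, here is a minimal sketch of a two-proportion z-test on the two comparisons above (87% vs 84% for survey experience, 89% vs 86% for survey length). The article does not say which test was used or give cell sizes, so the equal split of 7,200 completes into three cells of 2,400 is an assumption, as is the choice of test.

```python
from math import erfc, sqrt


def two_proportion_z(p1: float, n1: int, p2: float, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z statistic, two-sided p-value)."""
    pooled = (p1 * n1 + p2 * n2) / (n1 + n2)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / standard_error
    # Two-sided p-value for a standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


# ASSUMPTION: 7,200 completes split equally across the three incentive cells.
N_PER_CELL = 7200 // 3

experience_z, experience_p = two_proportion_z(0.87, N_PER_CELL, 0.84, N_PER_CELL)
length_z, length_p = two_proportion_z(0.89, N_PER_CELL, 0.86, N_PER_CELL)

print(f"Survey experience: z = {experience_z:.2f}, p = {experience_p:.4f}")
print(f"Survey length:     z = {length_z:.2f}, p = {length_p:.4f}")
```

Under these assumptions both differences clear the conventional 5% threshold, which is consistent with the article's description; smaller cells would make the same gaps harder to distinguish from noise.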

Better incentives give a better impression and improve satisfaction but how do they affect survey drop-out rates? Drop-out rates did increase with lower incentive rates, but not by huge amounts – 29.9% in the high-incentive group, 33.2% in the normal group and 36.7% at the lowest incentive level. Does that mean we can safely pay our respondents less and less, and drive down our cost per complete? Clearly not.
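One way to read these drop-out figures is in terms of how many respondents must start a survey to reach a fixed number of completes. The sketch below assumes that the drop-out rate is the share of starters who do not finish, and uses a hypothetical target of 2,400 completes per cell; neither assumption is stated in the article.

```python
from math import ceil

# Drop-out rates quoted in the article for each incentive cell.
DROP_OUT = {"high": 0.299, "standard": 0.332, "low": 0.367}

# ASSUMPTION: hypothetical target, not a figure from the study.
TARGET_COMPLETES = 2400


def starts_needed(completes: int, drop_out_rate: float) -> int:
    """Starters required to reach `completes`, if `drop_out_rate` of starters quit."""
    return ceil(completes / (1 - drop_out_rate))


starts = {cell: starts_needed(TARGET_COMPLETES, rate) for cell, rate in DROP_OUT.items()}
extra_share = starts["low"] / starts["high"] - 1

for cell, n in starts.items():
    print(f"{cell}: {n} starts for {TARGET_COMPLETES} completes")
print(f"Low vs high incentive: {extra_share:.1%} more starts needed")
```

On this reading, the lowest incentive needs roughly a tenth more starters than the highest to deliver the same number of completes, so some of the saving on incentives is offset by the extra sample that has to be invited.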

Our previous study showed there is a tangible, negative cumulative effect if you consistently deliver a bad survey experience to a participant. We modelled this by looking at ‘likelihood to participate in future surveys’ administered after every completed survey on Research Now’s online access panels. The result is that after 30 consistently negative surveys the panel would be 60% of the size of a panel receiving entirely positive survey experiences.
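To get a feel for what the 60% figure implies per survey, the sketch below assumes the loss compounds at a constant rate with each negative survey. That geometric model is a simplifying assumption for illustration and is not the model Research Now describes.

```python
def per_survey_retention(relative_size: float, n_surveys: int) -> float:
    """Constant per-survey retention ratio that compounds to `relative_size`."""
    return relative_size ** (1 / n_surveys)


def relative_panel_size(retention: float, n_surveys: int) -> float:
    """Panel size relative to the all-positive panel after `n_surveys` bad surveys."""
    return retention ** n_surveys


# Figures from the article: 60% of the comparison panel after 30 negative surveys.
retention = per_survey_retention(0.60, 30)

print(f"Implied relative retention per survey: {retention:.4f}")
for n in (5, 10, 20, 30):
    print(f"After {n:2d} negative surveys: {relative_panel_size(retention, n):.1%}")
```

Under this assumption each bad survey costs a little under 2% of the panel relative to the all-positive case, which looks small in isolation but compounds to the 40% gap over 30 surveys.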

Panel dynamics are far more complex than this, but the underlying message is clear: cumulatively underpaying a panellist, and not offering an appropriate, respectful trade-off for time spent online, considerably damages the likelihood of their participating in further research. Add in consistently poor questionnaire design and longer questionnaires, and the results are much worse. We have a duty to make sure that surveys are relevant and engaging and that appropriate rewards are given. Otherwise we are just building houses on sand.

Of course, appropriately incentivising respondents is only one way to maintain panel integrity and the goodwill of respondents. As we know, survey engagement is a bit of a knotty issue, encompassing use of rich media, channel preferences, survey length, overall panel management and the number of surveys respondents are asked to do.

In our view, poor design, poor panel management, irrelevant surveys, high screen-out rates, long surveys and low incentives combine cumulatively to reduce data quality and increase respondent fatigue. And respondents are not just growing tired of one particular research or panel company – bad interactions with respondents give our industry, as a whole, a bad name.

Today, research companies interact with more consumers than ever before. Contact has increased rapidly over the last five years and our footprint is about to get even larger with the advent of mobile as a sustainable research channel. We have an amazing opportunity to position what we do in a very positive light. Let’s make it all about respect for and understanding of our participants.

If we don’t, then we are fated to see the global pool of those willing and able to take part in primary research studies shrink while at the same time appetite for data grows. The industry faces a huge potential headache if we ignore the needs of the participant.

Chris Dubreuil is senior vice-president and Mike Murray is head of project consultancy for Research Now

Andrea McGeachin

It’s easy to understand the success of online research. It has enabled businesses to gather insight from ever wider pools of respondents, with speed and relative ease of data capture. But rewarding panellists for their work is easier said than done. With more and more panels being made up of global representatives, there is the added complication of sending money overseas, negotiating national payment regulations and currencies.

Panellists are unlikely to remain motivated unless they are getting regular payments for their time, so remuneration is a critical business process that it is damaging to overlook. Mainstream bank services lack the flexibility to support businesses with these lower value, higher frequency international payments, and although the payment options available to market research companies are varied, they are out of step with the need for faster, simplified payments.

Many organisations still use voucher-based rewards. This obviously restricts the recipient to spending on a single website – like Amazon, say – but it can be an easier method to overcome currency challenges. However, one of the most common means of paying online panellists is through cheques. Not only is this an incredibly intensive administration process for the market research firm, it is also highly inefficient for the panellist.

One option for market research companies is to use prepaid card schemes. Prepaid cards work just like a regular debit or credit card except they have to be preloaded with money before they can be used. So if a panellist wants cash, the market research firm can issue the panellist with a physical prepaid card which can be used at an ATM to withdraw money. Every time the panellist completes some work for the market research firm, the card can be loaded with money. Virtual cards can also be issued if panellists prefer to spend their money online. These can be used at any online merchant that accepts Visa or MasterCard.

It is possible to integrate prepaid card payment processes with panel management software. This allows companies to manage their accounts, see who needs to be paid and send payments all in one place. In addition, panellists can access an online portal to track their income.

Andrea McGeachin is commercial director at electronic payment services company Ixaris

3 Comments

NickD

12 years ago

Interesting article, and very interesting study, but (IMHO), I'd suggest you're overplaying the importance of incentives. "Panellists are unlikely to remain motivated unless they are getting regular payments for their time" While I appreciate from years of experience that *some* reward is better than none, if we think rationally about the average hourly wage and the average survey duration, there's a huge disconnect between incentives on offer and 'fair' remuneration for the time spent. It still surprises me that affluent respondents settle for such low incentives; but I believe very strongly that the survey design and subject matter is far more important than the incentive. £1 a survey is very nice, but in reality £10 for 3 months' work of answering 20 minute surveys every 10 days is not really the driving motive, particularly for cash-rich, time-poor respondents who can be tricky to reach anyway. Interesting studies set up in engaging ways are more of value - even the 'fun' of picking holes in poor surveys has started to pall for me after a while...!


jbs

12 years ago

This is interesting, very relevant even, but makes the assumption that somehow all costs for sample are related to sample themselves. There are several ways of keeping costs down. Locating sales offices out of city centres is one, having pms/quote staff in low cost countries another. And dare I say it not having highly paid middle managers is another, as is keeping conference visits down to a minimum. At the end of the day there are several ways of responding to price pressure. A good panel company will keep incentives high and cut on the other things mentioned if necessary. Sample quality is key to success in this business.


Chris Dubreuil

12 years ago

Thanks Nick. Certainly, an interesting subsequent study would be to measure the weight of engagement delivered by incentive, subject matter and survey design engagement - they are interrelated, and all important, but to what degree? It is always a fine line that we tread - we do not want to over incentivise - if panelists are getting wage equivalent for survey taking we get fraudulent behaviour - simple as that. We cannot and should not discount the role of incentive, survey design engagement, relevance of subject, and screening in an unbiased manner to get to the right participant. My main point is that with prices under pressure, the incentive payment can be seen as a lever to take down overall price, and this is harmful. We need to make sure we are consistent and manage our panelists' expectations, including respectful payments for time online.
